“Decoding LLMs, Transformers, and the Science of Natural Language Understanding”

Introduction

Hey Reader, ever feel like the world of AI is zooming past faster than a rickshaw on Delhi’s streets? You’re not alone! Just a year ago, I was wondering what those whizz-bang Large Language Models everyone was talking about actually were. But then I used one to write a Bollywood script in minutes, and let me tell you, it was mind-blowing!

So, if you’re curious about these AI marvels that can write like Rabindranath Tagore, translate languages smoother than butter naan, and even generate code like a coding ninja, grab your chai and settle in! In this series of blogs, we’ll talk about large language models, their use cases, how the models work, prompt engineering, how to make creative text outputs, and outline a project lifecycle for generative AI projects.

Attention is All You Need

Once upon a time in the ever-buzzing world of technology, a breakthrough occurred in 2017 that changed the way computers understood and spoke our language. It was a turning point that marked the birth of Large Language Models (LLMs), these super-smart algorithms that could chat, write, and understand words just like us humans — well, almost!

Yaar, imagine the days before LLMs! Your chatbots and assistants were like eager friends, always ready to talk. But sometimes their words felt a bit clunky, like a song translated into another language: the tune was there, but the heart wasn’t quite right. They couldn’t quite grasp the “masala” of our conversations, the hidden meanings, and all that jazz.

Then, like a sage revealing a secret mantra, a group of clever minds from Google Brain, Google Research, and the University of Toronto introduced the Transformer model in a paper called ‘Attention is All You Need.’ This wasn’t just another tech gimmick; it was a game-changer for AI. Think of it like a super translator, not just of words, but of the whole ‘baat’ itself. It pays attention to how words connect, how they build the mood, and how they make us laugh or cry.

Breaking Down the Basics (Concepts and Processes)

GenAI: Generative AI, often shortened to GenAI, is a fascinating branch of Machine Learning focused on creating new things, not just analyzing or interpreting existing data. Imagine it as a digital artist, composer, or writer. GenAI models learn these abilities by finding statistical patterns in massive datasets of content originally created by humans. Large language models are the text-focused members of this family: they are trained on huge text corpora (up to trillions of words for the largest models) over weeks or months and with large amounts of computing power. Examples include BERT, BART, GPT-3, and BLOOM.

Tokens: Tokens are the basic units of text that large language models (LLMs) use to process and generate natural language. Different LLMs tokenize text in different ways: word-based, sub-word-based, or character-based. Tokens are the bridge between raw text and an LLM’s numerical world, because each token is mapped to a number before the model sees it.

Example: Suppose we have the sentence “I love LLMs”. A word-based tokenizer would split it into tokens like ['I', 'love', 'LLMs']. A sub-word-based tokenizer might split it into tokens like ['I', 'love', 'LL', 'Ms']. A character-based tokenizer would split it into tokens like ['I', ' ', 'l', 'o', 'v', 'e', ' ', 'L', 'L', 'M', 's'].
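To make the three styles concrete, here is a minimal sketch in plain Python. The tiny vocabulary `TOY_VOCAB` and the greedy longest-match rule are assumptions made up for this illustration; real tokenizers learn much larger vocabularies from data and use more careful algorithms.

```python
# Toy sub-word vocabulary, invented for this example only.
TOY_VOCAB = {"I", "love", "LL", "Ms"}

# Map every vocabulary entry to an integer ID (the model's "numerical world").
TOKEN_TO_ID = {tok: i for i, tok in enumerate(sorted(TOY_VOCAB))}


def word_tokenize(text):
    """Word-based: split on whitespace."""
    return text.split()


def char_tokenize(text):
    """Character-based: every character, spaces included, is a token."""
    return list(text)


def subword_tokenize(text, vocab=TOY_VOCAB):
    """Sub-word-based: greedily take the longest vocabulary piece at each step."""
    tokens = []
    for word in text.split():
        start = 0
        while start < len(word):
            end = len(word)
            # Shrink the candidate piece until it is found in the vocabulary.
            while end > start and word[start:end] not in vocab:
                end -= 1
            if end == start:
                # Nothing matched: fall back to a single character.
                end = start + 1
            tokens.append(word[start:end])
            start = end
    return tokens


def encode(tokens):
    """Turn tokens into integer IDs using the toy vocabulary."""
    return [TOKEN_TO_ID[tok] for tok in tokens]


sentence = "I love LLMs"
print(word_tokenize(sentence))     # ['I', 'love', 'LLMs']
print(subword_tokenize(sentence))  # ['I', 'love', 'LL', 'Ms']
print(char_tokenize(sentence))
print(encode(subword_tokenize(sentence)))  # [0, 3, 1, 2]
```

The last line is the important one: the model never sees the letters, only the list of IDs.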

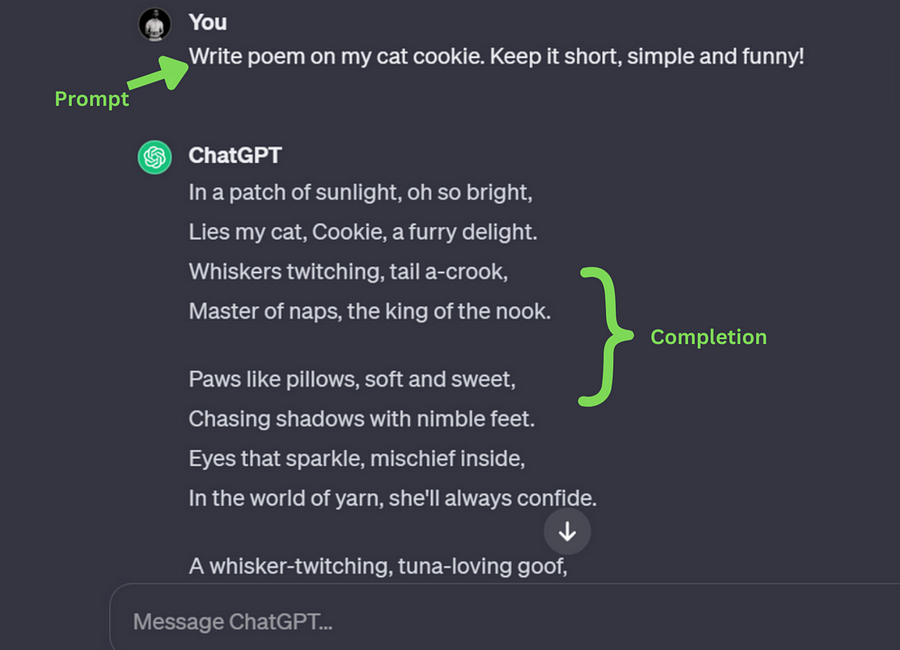

Prompt: The text you pass to an LLM is called the prompt. It’s the input that sets the stage for what the model will generate.

Make sure to highlight the key details right at the beginning of your prompt. In my case, I forgot to mention that my cat is male, and ChatGPT assumed it was female. Why is it crucial to include important information in the first few lines? You’ll see in just a minute, when we get to a concept known as the Context Window.

Context Window: How Much Conversation Does It Remember?

In Large Language Models (LLMs), the context window is the span of text, measured in tokens, that the model can consider at once while generating a response. This window defines the model’s capacity to reference and use information from the input text to create coherent and contextually appropriate outputs. Remember that different models have different context window sizes, so it’s important to check the size before you use one.

Mechanism:

- Input Reception: The LLM receives a prompt or initial text as input.

- Window Initialization: The context window starts out covering the input tokens, or only as many of them as fit if the input is longer than the window.

- Contextual Processing: The LLM attends to the tokens within the window, extracting semantic and syntactic features.

- Token Generation: Based on the processed context, the LLM generates a new token, extending the text sequence.

- Window Progression: The newly generated token joins the context. Once the window is full, it slides forward and the oldest token drops out, keeping the focus on the most recent text.

- Iteration: The contextual processing, token generation, and window progression steps repeat until the model generates a complete text sequence or reaches a predetermined termination condition.

Example: if the context window is 50 tokens:

- The LLM first considers tokens 1–50 of the input text.

- It generates token 51 based on this context.

- The window then shifts to tokens 2–51, and so on.
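The sliding behaviour in this example can be sketched in a few lines of Python. Everything here is a simplified illustration: `toy_next_token` is a made-up stand-in for a real model (it just returns the last token plus one), and tokens are plain integers so the window is easy to follow.

```python
def toy_next_token(window):
    """Hypothetical stand-in for an LLM: returns the last token plus one."""
    return window[-1] + 1


def generate(prompt_tokens, next_token_fn, window_size, max_new_tokens):
    """Generate tokens one at a time, looking only at the latest window_size tokens."""
    tokens = list(prompt_tokens)
    windows_seen = []
    for _ in range(max_new_tokens):
        # Only the most recent window_size tokens are visible to the model.
        window = tokens[-window_size:]
        windows_seen.append((window[0], window[-1]))
        tokens.append(next_token_fn(window))
    return tokens, windows_seen


# Input text of 50 tokens, numbered 1 to 50, with a 50-token window.
tokens, windows = generate(list(range(1, 51)), toy_next_token,
                           window_size=50, max_new_tokens=2)
print(windows)      # [(1, 50), (2, 51)]
print(tokens[-2:])  # [51, 52]
```

Notice that token 1 is no longer visible when token 52 is generated. Anything that falls out of the window is simply forgotten, which is why an important detail should stay inside the text the model can still see.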

Transformer: The Engine Behind LLM Magic

In 2017, the landscape of AI language processing shifted dramatically with the introduction of the Transformer architecture. This groundbreaking model, introduced in the seminal paper “Attention is All You Need”, revolutionized how machines understand and generate language. But what exactly is the Transformer, and how does it work?

Was that too much? Here’s a simpler version for you to remember.

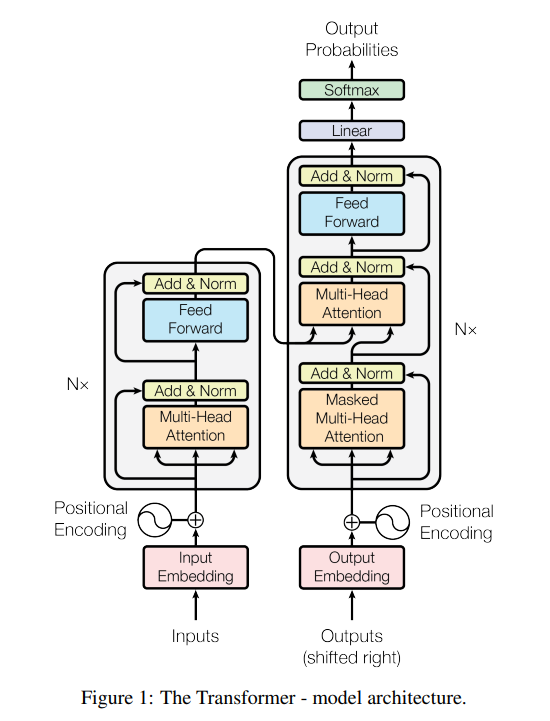

Here’s a simplified breakdown of the key components that make the Transformer tick:

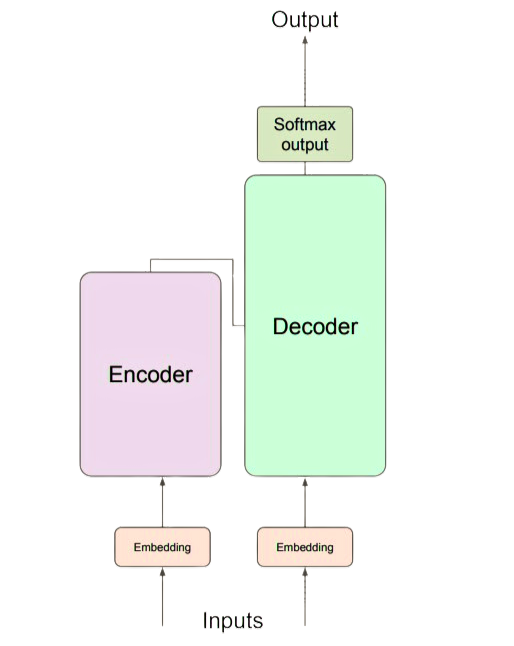

Encoder-Decoder & Embeddings

- Encoder-Decoder: The Transformer architecture follows an encoder-decoder structure. The encoder analyzes the input text (the sentence you want to translate, the poem you want to write), identifying key relationships and building a rich representation of its meaning. The decoder then uses this information to generate the output (the translated sentence, the poem’s next verse).

- Positional Embedding: Positional encoding gives the Transformer information about the order of tokens in a sequence. Because attention looks at all tokens at once, the model has no built-in sense of which word came first, so position information is added to each token’s embedding. This is essential for tasks like natural language processing, where the order of words in a sentence can significantly change its meaning.

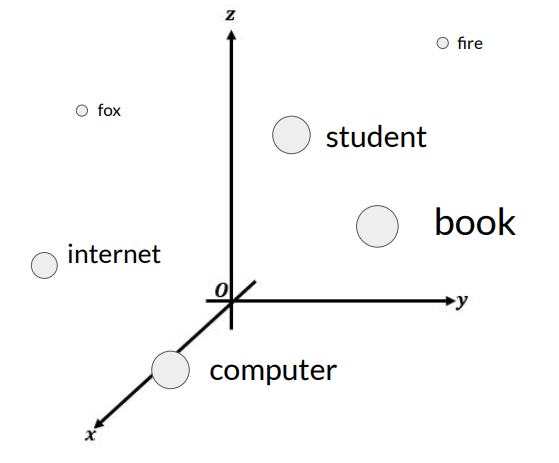
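One concrete way to build positional encodings is the sinusoidal scheme from the original Transformer paper, sketched below in plain Python. This is only one option (many models learn their position embeddings instead), and the sizes used in the example (3 positions, 4 dimensions) are tiny values picked for readability.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encoding: one vector of length d_model per position.

    Even dimensions use sine, odd dimensions use cosine, and the wavelength
    grows with the dimension index.
    """
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe


pe = positional_encoding(seq_len=3, d_model=4)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0]
for row in pe[1:]:
    print([round(value, 4) for value in row])
```

Each position gets a different vector, and that vector is added to the token’s embedding, so the same word at position 0 and position 2 looks slightly different to the model.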

- Embedding: An embedding is a representation of words, phrases, or sentences as vectors in a high-dimensional space. Word embeddings, in particular, have become a fundamental concept in natural language processing.

a. Word Embeddings: These are numerical representations of words, where words with similar meanings are mapped to vectors that are close to each other in the embedding space. Word embeddings capture semantic relationships between words and are often used to represent words in a way that is more suitable for machine learning models.

b. Sentence Embeddings: Beyond word embeddings, there are techniques to generate representations for entire sentences or paragraphs. These embeddings aim to capture the overall meaning or context of a piece of text.
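Here is a small sketch of what “close to each other in the embedding space” means. The three-dimensional vectors in `TOY_EMBEDDINGS` are invented by hand for this example (real embeddings are learned and have hundreds or thousands of dimensions), and averaging word vectors is just one simple way to get a sentence embedding, not what any particular model does.

```python
import math

# Hand-made toy vectors, purely illustrative.
TOY_EMBEDDINGS = {
    "chai": [0.9, 0.1, 0.0],
    "tea": [0.8, 0.2, 0.1],
    "rickshaw": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means 'pointing the same way'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def sentence_embedding(words, embeddings=TOY_EMBEDDINGS):
    """A very simple sentence embedding: the average of the word vectors."""
    vectors = [embeddings[w] for w in words]
    dims = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dims)]


similar = cosine_similarity(TOY_EMBEDDINGS["chai"], TOY_EMBEDDINGS["tea"])
different = cosine_similarity(TOY_EMBEDDINGS["chai"], TOY_EMBEDDINGS["rickshaw"])
print(round(similar, 3), round(different, 3))
print(sentence_embedding(["chai", "tea"]))
```

With these toy numbers, “chai” and “tea” score close to 1, while “chai” and “rickshaw” score close to 0.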

Attention

- Attention: This is the magic sauce of the Transformer. Unlike previous models that processed words sequentially, the Transformer can pay attention to all words in an input simultaneously. It uses a sophisticated mechanism to calculate the importance of each word relative to the current one being processed, allowing it to understand complex relationships and contexts.

- Self-Attention: This powerful technique, used in both the encoder and the decoder, lets the Transformer work out how the words within a sentence relate to each other. By paying attention to surrounding words, the Transformer can pick up on context and even cues for sarcasm or double meanings.

- Multi-Head Attention: This adds another layer of complexity and nuance to the attention mechanism. Instead of just one “head,” the Transformer has multiple attention heads, each focusing on different aspects of the relationships between words. This allows it to capture various types of information, like grammatical structure and semantic similarities.
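The three ideas above can be sketched with plain Python lists. This is a deliberately stripped-down illustration: it implements the scaled dot-product attention formula from the original paper, but it leaves out the learned projection matrices that real Transformers apply to get queries, keys, and values. Here each head simply works on its own slice of the input vectors, and the input `x` is made-up data.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: every query looks at every key at once."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How relevant is each token (key) to the current token (query)?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


def multi_head_self_attention(x, num_heads):
    """Simplified multi-head self-attention without learned projections."""
    d_model = len(x[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    head_dim = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        lo, hi = h * head_dim, (h + 1) * head_dim
        piece = [row[lo:hi] for row in x]
        # Self-attention: queries, keys, and values all come from the same tokens.
        out, _ = attention(piece, piece, piece)
        head_outputs.append(out)
    # Concatenate the heads back into one vector per token.
    return [sum((head_outputs[h][t] for h in range(num_heads)), [])
            for t in range(len(x))]


# Three tokens, each a 4-dimensional vector (made-up numbers).
x = [[1.0, 0.0, 0.0, 1.0],
     [0.0, 1.0, 1.0, 0.0],
     [1.0, 1.0, 0.0, 0.0]]
_, weights = attention(x, x, x)
for row in weights:
    print([round(w, 3) for w in row])
print(multi_head_self_attention(x, num_heads=2))
```

Each printed row of weights shows how much one token attends to every token in the sentence, itself included, and each row sums to 1.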

Different variations of Transformer architecture:

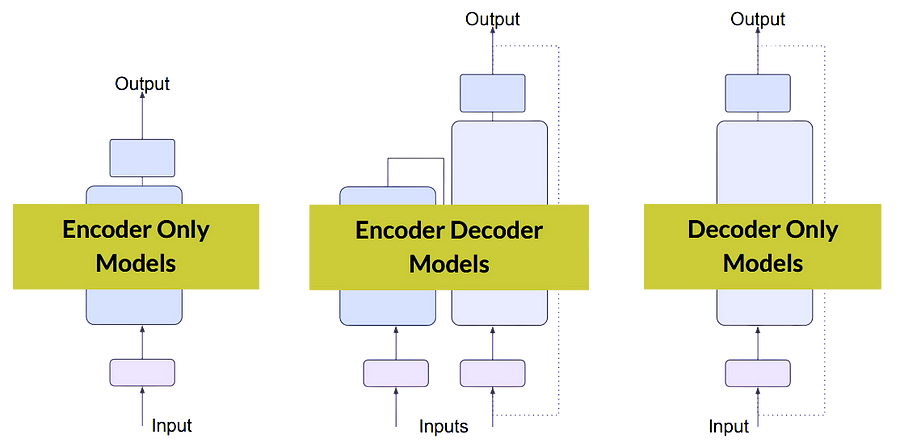

There are three main types of LLMs, each with its own strengths and weaknesses:

Encoder-only Models:

Encoder-only models are also known as “autoencoding models.” They are pre-trained with masked language modeling (MLM) and consist solely of an encoder stack, with no decoder component. These LLMs prioritize understanding input sequences and encoding them into dense representations, rather than generating new sequences.

MLM Objective: Reconstruct text.

Here, tokens in the input sequence are randomly masked, and the model’s objective is to predict the masked tokens so that the original sequence is reconstructed.

This works bi-directionally.

e.g. “The mother teaches the son.” → “The mother <mask> the son.”

Autoencoding models create a bidirectional representation of the input sequence, meaning the model has the full context around a masked token and not just the tokens that come before it.
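The masking step can be sketched like this. It is only a toy version: the 15% default rate is an assumption borrowed from the BERT paper, and real MLM pipelines add further tricks that are left out here. The model itself is not shown, only how the training input and the “answers” are prepared.

```python
import random

MASK = "<mask>"


def mask_positions(tokens, positions):
    """Replace the tokens at the given positions with <mask>.

    Returns the masked sequence and a dict of position -> original token,
    which is what the model is asked to predict.
    """
    chosen = set(positions)
    masked = [MASK if i in chosen else tok for i, tok in enumerate(tokens)]
    labels = {i: tokens[i] for i in sorted(chosen)}
    return masked, labels


def random_mask(tokens, mask_prob=0.15, seed=0):
    """Pick positions at random, each with probability mask_prob."""
    rng = random.Random(seed)
    positions = [i for i in range(len(tokens)) if rng.random() < mask_prob]
    return mask_positions(tokens, positions)


sentence = ["The", "mother", "teaches", "the", "son", "."]
masked, labels = mask_positions(sentence, [2])
print(masked)  # ['The', 'mother', '<mask>', 'the', 'son', '.']
print(labels)  # {2: 'teaches'}
```

The model sees “The mother” on the left and “the son .” on the right of the mask, which is exactly the bidirectional context described above.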

Applications: Encoder-only models excel in tasks that emphasize comprehension and knowledge extraction, such as:

- Question answering

- Text classification

- Sentiment analysis

- Named entity recognition.

Well Known Models:

- BERT: Bidirectional Encoder Representations from Transformers

- RoBERTa: A Robustly Optimized BERT Pretraining Approach

Decoder-only Models:

Decoder-only models are also known as “autoregressive” models. They are pre-trained using causal language modeling (CLM).

Objective: Predict The Next Token

The primary objective of Decoder-only models is to predict the subsequent token based on the preceding sequence of tokens. This approach is often referred to as ‘full language modeling’ by researchers.

These models employ an autoregressive method that masks the input sequence, allowing the model to only consider the input tokens leading up to the current position.

e.g. “The mother teaches the son.” → “The mother <mask>” (everything after the masked position is hidden from the model)

Decoder-only models operate in a unidirectional manner. This means they process the input sequence from left to right and use the ‘unidirectional context’ to predict the next token. This context includes all the preceding tokens in the sequence but none of the subsequent ones. This characteristic is fundamental to the autoregressive nature of these models.
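Two small helpers make this “left to right only” idea concrete. This is an illustrative sketch rather than real training code: `causal_mask` builds the grid that says which positions may look at which, and `next_token_examples` lists the (context, next token) pairs a sentence gives rise to.

```python
def causal_mask(n):
    """n x n grid: entry [i][j] is 1 if position i may look at position j.

    A position can see itself and everything before it, never what comes after.
    """
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def next_token_examples(tokens):
    """Every prefix of the sequence paired with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


for row in causal_mask(3):
    print(row)
# [1, 0, 0]
# [1, 1, 0]
# [1, 1, 1]

for context, target in next_token_examples(["The", "mother", "teaches", "the", "son"]):
    print(context, "->", target)
```

The second loop prints four training examples from one short sentence, starting with ['The'] -> mother and ending with ['The', 'mother', 'teaches', 'the'] -> son.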

Applications:

- Text Generation: Decoder-only models are extensively used for generating human-like text. This can be applied in various domains like creative writing, content creation, and more.

- Chatbots: They are used in the development of advanced chatbots and virtual assistants that can understand and respond to user queries in a conversational manner.

Well Known Models:

- GPT (Generative Pre-trained Transformer): GPT and its subsequent versions, GPT-2 and GPT-3, are some of the most popular decoder-only models. They focus on autoregressive language modeling and text generation.

- PaLM: Introduced by Google, PaLM is a 540-billion-parameter, dense, decoder-only Transformer model. At its release, it demonstrated state-of-the-art few-shot performance on most of the tasks it was evaluated on.

Encoder-Decoder Models / Sequence-to-Sequence Models:

Encoder-decoder models, also referred to as Sequence-to-Sequence (Seq2Seq) models, use both the Encoder and Decoder components of the original Transformer architecture.

A notable example of a sequence-to-sequence model is T5, a popular Transformer-based architecture. T5 is pre-trained using span corruption, a technique that masks random spans of input tokens. Each masked span is then replaced with a single unique sentinel token, written <x> in the example below (T5’s own vocabulary names them <extra_id_0>, <extra_id_1>, and so on). This approach helps the model learn contextual relationships and dependencies within the input sequences, which pays off across many natural language processing tasks.

e.g.

The mother teaches the son. → The mother <Mask> <Mask> son. → The mother <x> son.

Sentinel tokens are special tokens that are added to the vocabulary but do not correspond to any actual word from the input text.

The decoder is then tasked with reconstructing the masked token spans autoregressively. Its output is each sentinel token followed by the tokens predicted for that span.
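Here is a minimal sketch of how one training pair could be prepared with span corruption. The function name `span_corrupt` and the hand-picked span are assumptions for illustration. The sentinel names follow the `<extra_id_N>` pattern from T5’s vocabulary (the article’s <x> plays the same role), and details such as random span selection and the closing sentinel that the original T5 setup appends to the target are left out.

```python
def span_corrupt(tokens, spans):
    """Replace each (start, end) span with one sentinel token.

    spans must be sorted, non-overlapping, with end exclusive.
    Returns (encoder_input, decoder_target).
    """
    encoder_input, decoder_target = [], []
    cursor = 0
    for idx, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{idx}>"
        # Keep the untouched text before the span, then drop in the sentinel.
        encoder_input.extend(tokens[cursor:start])
        encoder_input.append(sentinel)
        # The decoder must produce the sentinel followed by the hidden tokens.
        decoder_target.append(sentinel)
        decoder_target.extend(tokens[start:end])
        cursor = end
    encoder_input.extend(tokens[cursor:])
    return encoder_input, decoder_target


sentence = ["The", "mother", "teaches", "the", "son", "."]
enc, dec = span_corrupt(sentence, [(2, 4)])
print(enc)  # ['The', 'mother', '<extra_id_0>', 'son', '.']
print(dec)  # ['<extra_id_0>', 'teaches', 'the']
```

The encoder reads the corrupted sentence, and the decoder learns to write out what was hidden behind each sentinel.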

Applications:

- Text Summarization: Seq2Seq models are widely used for text summarization. The encoder turns the input sequence (the full text) into contextual representations, and the decoder attends to them while generating a concise summary. This application is valuable for quickly extracting key information from lengthy documents or articles.

- Translation: They are used for machine translation, where Seq2Seq models play a crucial role in converting text from one language to another. The encoder processes the input sentence in the source language, and the decoder generates the corresponding translation in the target language. This application is instrumental in breaking down language barriers and facilitating cross-language communication.

Well Known Models:

- BART (Bidirectional and Auto-Regressive Transformers): BART is a sequence-to-sequence model introduced by Facebook AI Research (FAIR). It pairs a bidirectional encoder with an autoregressive decoder to improve the generation of coherent and fluent text.

- Flan-T5: Flan-T5 is a text-to-text Transformer model developed by Google Research. It builds upon the T5 (Text-to-Text Transfer Transformer) architecture and adds instruction fine-tuning, making it a promising choice for diverse NLP tasks.

Conclusion:

At the end of this first part where we learned the basics of Large Language Models, it’s like we’ve built the groundwork for a big adventure. We understood how these models talk, learn, and use lots of information to be smart.

Now, it’s time for the exciting part! In the next part, we’re going to see what these models can really do. They can write interesting things and even change how things work in different fields.

Stay with me, and we’ll explore how these models can make cool things happen through a process called fine-tuning. It’s like giving these models special training for specific tasks, making them super useful. Imagine customizing them for writing content, helping in customer support, or transforming data analysis. We’re about to unlock a world of new possibilities with the magic of language, and in the next part, we’ll learn how to make these models work specifically for us. So, let’s go together into the practical side of Large Language Models and see how they can make our future awesome!

Thanks for reading this article! Don’t forget to clap 👏 or leave a comment 💬! And most importantly subscribe and follow me (Vishal) to receive notification of my new articles on Data Science and Statistics.